About The Book

Hands-On Machine Learning with Scikit-Learn and TensorFlow.

by Aurélien Géron.

To get the latest release: Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow, 2nd Edition (oreilly.com)

Objective and Approach:

This book assumes that you know close to nothing about Machine Learning. Its goal is to give you the concepts, the intuitions, and the tools you need to actually implement programs capable of learning from data.

Python Frameworks Used

Scikit-Learn:

- Easy to use.

- Implements many Machine Learning algorithms efficiently.

TensorFlow:

- A more complex library for distributed numerical computation using data flow graphs.

- Makes it possible to train and run very large neural networks efficiently by distributing the computations across potentially thousands of multi-GPU servers.

Prerequisites

- Python programming experience.

- Familiarity with NumPy, Pandas, and Matplotlib.

- College-level math as well (calculus, linear algebra, probabilities, and statistics).

Code Examples Notebooks

GitHub - ageron/handson-ml: A series of Jupyter notebooks that walk you through the fundamentals of Machine Learning and Deep Learning in Python using Scikit-Learn and TensorFlow

----------------------------

Chapter 1. The Machine Learning Landscape

What Is Machine Learning?

Machine Learning is the science (and art) of programming computers so they can learn from data.

Engineering-oriented definition:

A computer program is said to learn from experience E with respect to some task T and some performance measure P, if its performance on T, as measured by P, improves with experience E.

Tom Mitchell, 1997

- For example, your spam filter is a Machine Learning program that can learn to flag spam given examples of spam emails (e.g., flagged by users) and examples of regular (nonspam, also called “ham”) emails.

- The examples that the system uses to learn are called the training set. Each training example is called a training instance (or sample).

Why Use Machine Learning?

Consider how you would write a spam filter using traditional programming techniques:

1- First you would look at what spam typically looks like.

2- You would write a detection algorithm for each of the patterns that you noticed, and your program would flag emails as spam if a number of these patterns are detected.

3- You would test your program, and repeat steps 1 and 2 until it is good enough.

Since the problem is not trivial, your program will likely become a long list of complex rules.

In contrast, a spam filter based on Machine Learning techniques automatically learns which words and phrases are good predictors of spam by detecting unusually frequent patterns of words in the spam examples compared to the ham examples.
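The idea of detecting words that are unusually frequent in spam can be sketched in a few lines of plain Python. This is only an illustration, not the book's code: the function names, the `min_ratio` threshold, the add-one smoothing, and the toy emails are all assumptions made for the example.

```python
from collections import Counter

def word_counts(emails):
    """Count in how many emails each lowercase word appears."""
    counts = Counter()
    for text in emails:
        counts.update(set(text.lower().split()))
    return counts

def spam_indicators(spam, ham, min_ratio=3.0):
    """Return the words that appear much more often in spam than in ham."""
    spam_counts, ham_counts = word_counts(spam), word_counts(ham)
    indicators = []
    for word, n_spam in spam_counts.items():
        # Add-one smoothing avoids dividing by zero for words never seen in ham.
        spam_rate = (n_spam + 1) / (len(spam) + 2)
        ham_rate = (ham_counts[word] + 1) / (len(ham) + 2)
        if spam_rate / ham_rate >= min_ratio:
            indicators.append(word)
    return sorted(indicators)

# Toy, made-up training set.
spam = ["free money now", "claim your free prize", "free offer for you"]
ham = ["meeting at noon", "see you at lunch", "your report is ready"]
print(spam_indicators(spam, ham))
```

On this toy data the only word that clears the threshold is "free", which appears in every spam example and in no ham example. A real filter would learn from far more data and use a proper classifier.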

Another area where Machine Learning shines is for problems that either are too complex for traditional approaches or have no known algorithm:

- speech and text recognition.

- image classification.

- stock prediction.

- etc.

Data mining

Applying ML techniques to dig into large amounts of data can help discover patterns that were not immediately apparent.

__________________

Types of Machine Learning Systems:

- Whether or not they are trained with human supervision (supervised, unsupervised, semisupervised, and Reinforcement Learning)

- Whether or not they can learn incrementally on the fly (online versus batch learning)

- Whether they work by simply comparing new data points to known data points, or instead detect patterns in the training data and build a predictive model, much like scientists do (instance-based versus model-based learning)

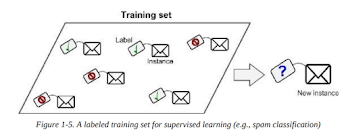

- Supervised learning: the training data you feed to the algorithm includes the desired solutions, called labels.

- A typical supervised learning task is classification.

- Another typical task is to predict a target numeric value, such as the price of a car, given a set of features. This sort of task is called regression.

Some of the most important supervised learning algorithms:

- k-Nearest Neighbors

- Linear Regression

- Logistic Regression

- Support Vector Machines (SVMs)

- Decision Trees and Random Forests

- Neural networks
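As a rough illustration of supervised classification, here is a minimal k-Nearest Neighbors sketch written with the standard library only. It is not Scikit-Learn's implementation; the function name, the two features, and the labeled examples are made up for the example.

```python
from collections import Counter
import math

def knn_predict(train_X, train_y, x, k=3):
    """Predict the label of x by majority vote among its k nearest training instances."""
    by_distance = sorted(
        zip(train_X, train_y),
        key=lambda pair: math.dist(pair[0], x),  # Euclidean distance to x
    )
    nearest_labels = [label for _, label in by_distance[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Made-up features per email: (number of links, number of exclamation marks).
train_X = [(0, 0), (1, 0), (0, 1), (8, 5), (7, 6), (9, 4)]
# The labels are the "desired solutions" supplied with the training data.
train_y = ["ham", "ham", "ham", "spam", "spam", "spam"]

print(knn_predict(train_X, train_y, (6, 5)))
print(knn_predict(train_X, train_y, (1, 1)))
```

The first query sits next to the spam examples and the second next to the ham examples, so the votes go to "spam" and "ham" respectively.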


----------------------------

- Unsupervised learning

In unsupervised learning, the training data is unlabeled. The system tries to learn without a teacher.

Some of the most important unsupervised learning algorithms:

- Clustering

k-Means

Hierarchical Cluster Analysis (HCA)

Expectation Maximization

- Visualization and dimensionality reduction

Principal Component Analysis (PCA)

Kernel PCA

Locally-Linear Embedding (LLE)

t-distributed Stochastic Neighbor Embedding (t-SNE)

- Association rule learning

Apriori

Eclat

For example, say you have a lot of data about your blog’s visitors. You may want to run a clustering algorithm to try to detect groups of similar visitors.
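To make the visitor-clustering example concrete, here is a bare-bones k-Means sketch in plain Python. It is a simplified illustration under stated assumptions: the visitor features, the choice of two clusters, and the initial centroids are invented, and a real project would more likely rely on a library implementation.

```python
import math

def k_means(points, centroids, n_iterations=10):
    """Basic k-means: alternate between assigning points and moving centroids."""
    for _ in range(n_iterations):
        # Assignment step: attach every point to its closest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            closest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[closest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
    return centroids, clusters

# Made-up visitor data: (visits per week, average minutes per visit). No labels.
visitors = [(1, 2), (2, 1), (1, 1), (9, 10), (10, 9), (10, 10)]
centroids, clusters = k_means(visitors, centroids=[visitors[0], visitors[3]])
print(clusters)
```

Note that the algorithm is never told which group a visitor belongs to; the two groups (occasional and heavy visitors) emerge from the data alone.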

----------------------------

- Semisupervised learning

Some algorithms can deal with partially labeled training data, usually a lot of unlabeled data and a little bit of labeled data.

* Some photo-hosting services, such as Google Photos, are good examples of this. Once you upload all your family photos to the service, it automatically recognizes that the same person A shows up in photos 1, 5, and 11, while another person B shows up in photos 2, 5, and 7. This is the unsupervised part (clustering). The system then needs just one label per person to name everyone in every photo.

* Most semisupervised learning algorithms are combinations of unsupervised and supervised algorithms.

----------------------------

- Reinforcement Learning

The learning system, called an agent in this context, can observe the environment, select and perform actions, and get rewards in return (or penalties in the form of negative rewards).

* For example, many robots implement Reinforcement Learning algorithms to learn how to walk.

----------------------------

- Batch learning

In batch learning, the system is incapable of learning incrementally: it must be trained using all the available data. This will generally take a lot of time and computing resources, so it is typically done offline.

* If you want a batch learning system to know about new data, you need to train a new version of the system from scratch on the full dataset.

----------------------------

- Online learning

In online learning, you train the system incrementally by feeding it data instances sequentially, either individually or by small groups called mini-batches. Each learning step is fast and cheap, so the system can learn about new data on the fly, as it arrives.

* Online learning is great for systems that receive data as a continuous flow (e.g., stock prices) and need to adapt to change rapidly or autonomously.

* Online learning algorithms can also be used to train systems on huge datasets that cannot fit in one machine’s main memory (this is called out-of-core learning). The algorithm loads part of the data, runs a training step on that data, and repeats the process until it has run on all of the data.

* One important parameter of online learning systems is how fast they should adapt to changing data: this is called the learning rate. If you set a high learning rate, then your system will rapidly adapt to new data, but it will also tend to quickly forget the old data.

* Conversely, if you set a low learning rate, the system will have more inertia; that is, it will learn more slowly, but it will also be less sensitive to noise in the new data or to sequences of nonrepresentative data points.

* A big challenge with online learning is that if bad data is fed to the system, the system’s performance will gradually decline. If we are talking about a live system, your clients will notice. For example, bad data could come from a malfunctioning sensor on a robot, or from someone spamming a search engine to try to rank high in search results.

* To reduce this risk, you need to monitor your system closely and promptly switch learning off (and possibly revert to a previously working state) if you detect a drop in performance.
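The incremental training loop and the role of the learning rate described above can be sketched as follows. This is a minimal example, assuming a linear model trained by mini-batch gradient descent on a squared-error loss; `data_stream` is a hypothetical stand-in for a real data source, and the target relationship y = 3x + 1 is made up.

```python
def sgd_step(w, b, batch, learning_rate):
    """One online update of the linear model y = w * x + b on a single mini-batch."""
    grad_w = grad_b = 0.0
    for x, y in batch:
        error = (w * x + b) - y
        # Gradient of the mean squared error over the mini-batch.
        grad_w += 2 * error * x / len(batch)
        grad_b += 2 * error / len(batch)
    return w - learning_rate * grad_w, b - learning_rate * grad_b

def data_stream(n_batches, batch_size=4):
    """Hypothetical data source yielding mini-batches drawn from y = 3x + 1."""
    xs = [0.0, 0.25, 0.5, 0.75, 1.0]
    for i in range(n_batches):
        batch_xs = [xs[(i + j) % len(xs)] for j in range(batch_size)]
        yield [(x, 3 * x + 1) for x in batch_xs]

w, b = 0.0, 0.0
for batch in data_stream(n_batches=2000):
    # Only the current mini-batch is in memory, as in out-of-core learning.
    w, b = sgd_step(w, b, batch, learning_rate=0.1)
print(round(w, 2), round(b, 2))
```

Each step only looks at one mini-batch, so the full dataset never has to fit in memory. Raising `learning_rate` makes the parameters follow recent batches more aggressively, while lowering it gives the inertia described above.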

----------------------------

- Instance-based learning

Instead of just flagging emails that are identical to known spam emails, your spam filter could be programmed to also flag emails that are very similar to known spam emails. This requires a measure of similarity between two emails. A (very basic) similarity measure between two emails could be to count the number of words they have in common. The system would flag an email as spam if it has many words in common with a known spam email.

This is called instance-based learning: the system learns the examples by heart, then generalizes to new cases using a similarity measure.
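The word-counting similarity measure described above could look like the sketch below. The function names, the `threshold` of three shared words, and the sample emails are assumptions chosen for illustration.

```python
def common_words(email_a, email_b):
    """Very basic similarity: number of distinct words the two emails share."""
    return len(set(email_a.lower().split()) & set(email_b.lower().split()))

def is_spam(email, known_spam, threshold=3):
    """Flag an email if it shares at least `threshold` words with any known spam email."""
    return any(common_words(email, spam) >= threshold for spam in known_spam)

# The "learned by heart" examples: the system simply stores them.
known_spam = ["win a free prize now", "cheap pills available now"]

print(is_spam("claim your free prize now", known_spam))
print(is_spam("lunch at noon tomorrow", known_spam))
```

There is no training step beyond storing the known spam emails; all the work happens at prediction time, when the new email is compared with the stored instances.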

----------------------------

- Model-based learning

Another way to generalize from a set of examples is to build a model of these examples, then use that model to make predictions. This is called model-based learning.
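As a small model-based example, the sketch below fits a straight line to a handful of points by ordinary least squares and then uses the fitted parameters to predict a new value. The car ages and prices are made-up numbers, and the choice of a linear model is an assumption for the sake of the example.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x

# Made-up training data: car age in years and price in thousands.
ages = [1, 2, 3, 4, 5]
prices = [18, 16, 14, 12, 10]

# "Training" means finding the model parameters that best fit the examples.
slope, intercept = fit_line(ages, prices)

# Prediction uses only the model, not the stored examples.
print(slope * 6 + intercept)
```

Unlike the instance-based approach, the training examples can be thrown away once the two parameters are known.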

----------------------------

Main Challenges of Machine Learning:

Since your main task is to select a learning algorithm and train it on some data, the two things that can go wrong are “bad algorithm” and “bad data.”

- Insufficient Quantity of Training Data

- Nonrepresentative Training Data

- Poor-Quality Data

- Irrelevant Features

- Overfitting the Training Data

- Underfitting the Training Data

----------------------------

Testing and Validating

The only way to know how well a model will generalize to new cases is to actually try it out on new cases.

* Rather than putting the model in production and waiting to see how it performs, a better option is to split your data into two sets: the training set and the test set. As these names imply, you train your model using the training set, and you test it using the test set. The error rate on new cases is called the generalization error (or out-of-sample error), and by evaluating your model on the test set, you get an estimation of this error.

* The problem is that if you measure the generalization error multiple times on the test set, you end up adapting the model and hyperparameters to produce the best model for that set. This means that the model is unlikely to perform as well on new data.

A common solution to this problem is to have a second holdout set called the validation set. You train multiple models with various hyperparameters using the training set, you select the model and hyperparameters that perform best on the validation set, and when you’re happy with your model you run a single final test against the test set to get an estimate of the generalization error.
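The train / validation / test workflow can be sketched as follows. This is a toy illustration under several assumptions: the 60/20/20 split ratios, the fixed random seed, the one-dimensional k-nearest-neighbors model, and the candidate values of `k` are all made up for the example.

```python
import random
from collections import Counter

def split(data, val_ratio=0.2, test_ratio=0.2, seed=42):
    """Shuffle the data and split it into training, validation, and test sets."""
    shuffled = data[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_ratio)
    n_val = int(len(shuffled) * val_ratio)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

def knn_predict(train, x, k):
    """Majority label among the k training instances closest to x."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def error_rate(train, dataset, k):
    """Fraction of instances in `dataset` that the model misclassifies."""
    wrong = sum(knn_predict(train, x, k) != label for x, label in dataset)
    return wrong / len(dataset)

# Made-up data: the label is True when the feature is at least 50.
data = [(x, x >= 50) for x in range(100)]
train, val, test = split(data)

# Model selection: compare hyperparameter values on the validation set only.
best_k = min([1, 3, 5], key=lambda k: error_rate(train, val, k))

# Single final evaluation on the test set estimates the generalization error.
test_error = error_rate(train, test, best_k)
print(best_k, test_error)
```

The test set is touched exactly once, after the hyperparameter has been chosen, so the reported error is not biased by the selection process.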

Thanks For Reading

Muhammad Nassef
